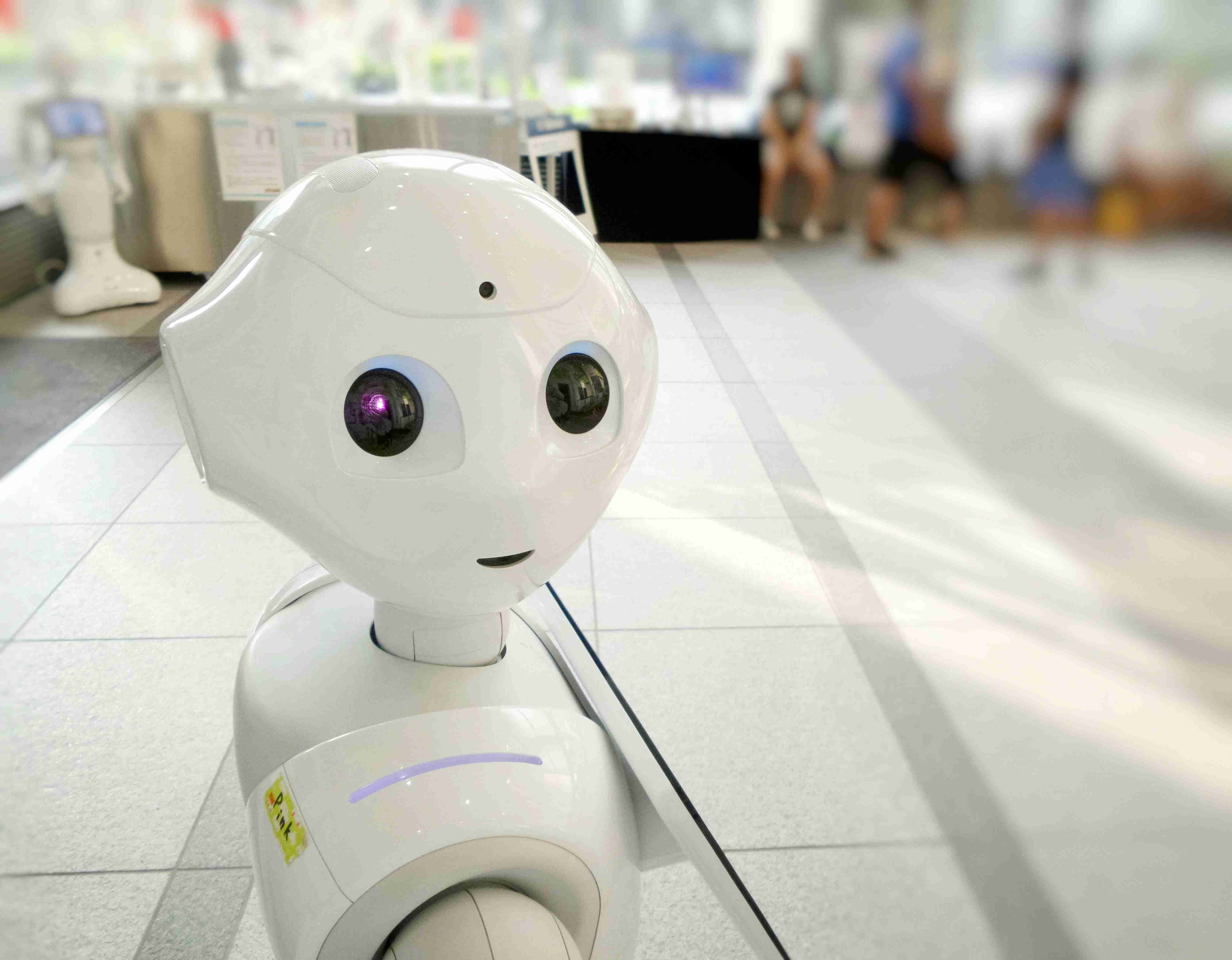

The Solution

thinkbridge architected and implemented a modular AI system built around secure access, real-time data, and domain context orchestration.

This included:

1. Secured AI Infrastructure

- Deployed ChatGPT Enterprise within a private Azure network

- Integrated SAML-based SSO for role-based access

- Auditable logs and full observability for IT and compliance teams
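
The sketch below shows one way role-based access and audit logging could fit together. It assumes the SSO layer has already validated the SAML assertion and extracted the user's group attribute. The group names, plugin names, and JSON audit record format are hypothetical and are not taken from the actual deployment.

```python
import json
from datetime import datetime, timezone

# Hypothetical mapping from IdP group names (delivered as a SAML attribute)
# to the business-system plugins each group may call. Illustrative only.
GROUP_PERMISSIONS = {
    "recruiters": {"ats"},
    "hr-ops": {"hris", "ats"},
    "sales": {"crm"},
    "it-support": {"itsm"},
}


def allowed_plugins(saml_groups):
    """Return the union of plugins granted by every group in the user's assertion."""
    allowed = set()
    for group in saml_groups:
        allowed |= GROUP_PERMISSIONS.get(group, set())
    return allowed


def authorize(user, saml_groups, plugin, audit_log):
    """Check access and append a JSON audit record whether or not access is granted."""
    granted = plugin in allowed_plugins(saml_groups)
    audit_log.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "plugin": plugin,
        "granted": granted,
    }))
    return granted


if __name__ == "__main__":
    log = []
    print(authorize("alice@example.com", ["recruiters"], "ats", log))  # True
    print(authorize("alice@example.com", ["recruiters"], "crm", log))  # False
    print(len(log))  # 2
```

Logging denied requests as well as granted ones is what gives IT and compliance teams a complete trail.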

2. Real-Time Business System Plugins

We built OpenAPI-compatible plugins to securely connect ChatGPT with:

- ATS – for candidate status and requisition tracking

- HRIS – for onboarding, employee, and benefits queries

- CRM – for client engagement and sales data

- ITSM tools – for ticket lookups and support status

This allowed natural-language interactions such as:
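
An OpenAPI-compatible plugin is described by an OpenAPI document that tells the model which operations exist and what parameters they take. The following is a minimal sketch, written as a Python dict, of what such a description might look like for a read-only ATS lookup. The server URL, path, `operationId`, and schema fields are hypothetical; the real plugins' contracts are not described in this case study.

```python
import json

# Hypothetical OpenAPI 3.0 description of a read-only ATS lookup endpoint.
# Every name below is an illustrative assumption.
ats_spec = {
    "openapi": "3.0.1",
    "info": {"title": "ATS Lookup (illustrative)", "version": "0.1.0"},
    "servers": [{"url": "https://ats-plugin.internal.example.com"}],
    "paths": {
        "/requisitions/{requisitionId}/candidates": {
            "get": {
                "operationId": "listCandidatesForRequisition",
                "summary": "List candidates and their current status for a requisition",
                "parameters": [
                    {
                        "name": "requisitionId",
                        "in": "path",
                        "required": True,
                        "schema": {"type": "string"},
                    },
                    {
                        "name": "status",
                        "in": "query",
                        "required": False,
                        "schema": {
                            "type": "string",
                            "enum": ["applied", "screening", "interview", "offer", "hired"],
                        },
                    },
                ],
                "responses": {
                    "200": {
                        "description": "Candidates for the requisition",
                        "content": {
                            "application/json": {
                                "schema": {
                                    "type": "array",
                                    "items": {
                                        "type": "object",
                                        "properties": {
                                            "candidateId": {"type": "string"},
                                            "name": {"type": "string"},
                                            "status": {"type": "string"},
                                        },
                                    },
                                }
                            }
                        },
                    }
                },
            }
        }
    },
}

if __name__ == "__main__":
    # Serialize to the JSON form a plugin host would typically fetch.
    print(json.dumps(ats_spec, indent=2))
```

A clear `summary` and constrained parameters (such as the `status` enum) give the model less room to construct invalid calls.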

3. Unstructured Knowledge Retrieval (RAG + Pinecone)

We implemented a Retrieval-Augmented Generation (RAG) pipeline using:

- OpenAI embeddings

- LangChain orchestration

- Pinecone vector DB

This made internal documents—from policies to process guides—searchable via semantic queries.

ChatGPT now responded with relevant, cited content to requests such as “Who approves international relocation packages?” and “Summarize the background check escalation SOP.”
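
The retrieval step of a RAG pipeline can be sketched without any external services. In the sketch below, a bag-of-words vector stands in for OpenAI embeddings and an in-memory index stands in for Pinecone; neither reflects those products' actual APIs, and LangChain is not used. The document IDs and placeholder texts are hypothetical. The point is the flow: embed the question, rank stored chunks by cosine similarity, and pass the top hits to the model with their IDs so the answer can cite them.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a sparse bag-of-words vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorIndex:
    """Minimal stand-in for a vector database: upsert by ID, top-k similarity query."""

    def __init__(self):
        self._items = {}  # doc_id -> (vector, text)

    def upsert(self, doc_id, text):
        self._items[doc_id] = (embed(text), text)

    def query(self, question, top_k=2):
        q = embed(question)
        scored = [(cosine(q, vec), doc_id, text) for doc_id, (vec, text) in self._items.items()]
        scored.sort(reverse=True)
        # Drop chunks with no similarity at all rather than padding the context.
        return [(doc_id, text) for score, doc_id, text in scored[:top_k] if score > 0]


def build_prompt(question, hits):
    """Assemble a grounded prompt that asks the model to cite source IDs."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in hits)
    return (
        "Answer using only the sources below and cite them by ID.\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    index = InMemoryVectorIndex()
    # Placeholder documents; real content would come from chunked policies and SOPs.
    index.upsert("relocation-policy", "Placeholder policy text: approval rules for international relocation packages.")
    index.upsert("bgcheck-sop", "Placeholder SOP text: escalation steps for background check issues.")
    index.upsert("pto-policy", "Placeholder policy text: paid time off accrual.")
    question = "Who approves international relocation packages?"
    print(build_prompt(question, index.query(question)))
```

In production the embedding model and vector store would replace `embed` and `InMemoryVectorIndex`, but the ranking and citation steps keep the same shape.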

4. High-Speed Caching Layer for Cost Optimization

To reduce inference overhead, we implemented:

- Redis for fast memory caching

- PostgreSQL for structured query logs

Frequently asked queries were cached, cutting redundant API calls by over 60% and improving latency across the platform.
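
Caching frequently asked queries typically follows the cache-aside pattern: look up a normalized key first and call the model only on a miss. The sketch below uses a small in-memory TTL cache as a stand-in for Redis (with redis-py the equivalent calls would be `get(key)` and `set(key, value, ex=ttl)`), and a hypothetical `call_model` function in place of the real inference call. The key-normalization rule and the one-hour default TTL are assumptions for illustration.

```python
import hashlib
import time


class TTLCache:
    """In-memory stand-in for Redis: values expire ttl seconds after being set."""

    def __init__(self, clock=time.monotonic):
        self._store = {}
        self._clock = clock

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[key]  # lazily evict expired entries
            return None
        return value

    def set(self, key, value, ttl):
        self._store[key] = (value, self._clock() + ttl)


def cache_key(query):
    # Assumed policy: normalize case and whitespace so trivially different
    # phrasings of the same question share one cache entry.
    normalized = " ".join(query.lower().split())
    return "llm:" + hashlib.sha256(normalized.encode("utf-8")).hexdigest()


class CachedAnswerer:
    """Cache-aside wrapper: consult the cache first, call the model only on a miss."""

    def __init__(self, call_model, cache, ttl=3600):
        self.call_model = call_model  # hypothetical function: str -> str
        self.cache = cache
        self.ttl = ttl
        self.hits = 0
        self.misses = 0

    def answer(self, query):
        key = cache_key(query)
        cached = self.cache.get(key)
        if cached is not None:
            self.hits += 1
            return cached
        self.misses += 1
        result = self.call_model(query)
        self.cache.set(key, result, self.ttl)
        return result


if __name__ == "__main__":
    calls = []

    def fake_model(q):
        calls.append(q)
        return f"answer to: {q}"

    answerer = CachedAnswerer(fake_model, TTLCache())
    answerer.answer("What is our PTO policy?")
    answerer.answer("  what is our   PTO policy?  ")
    print(len(calls), answerer.hits, answerer.misses)  # 1 1 1
```

The hit and miss counters are the kind of figures that, written to a query log, would let a team measure how many API calls the cache avoids.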
